Some people keep calling restic the best personal backup system around, yet I have learned that for others, even experienced Linux and macOS users, it remains an absolute mystery.

Here’s a usage scenario I suggest for restic beginners today.

The Repository Location

The repository is the location where restic will store the backup data.

Let’s not go into local backups or where your particular system mounts removable USB devices. For users with experience on the command line, SFTP will be most approachable for their first off-system restic repository.

The SFTP repository location for this example shall be:

sftp:demouser@backupserver:/home/demouser/restic-repository

I save this to ~/.config/restic/restic-url.txt in my home directory.

Remember to actually create this directory on the destination before initializing it as a repository.
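A minimal sketch of storing the URL, assuming the example host, user and paths used in this post (substitute your own):

```shell
# Make sure the config directory exists, then store the repository URL
# in the file that the later restic commands read it from.
mkdir -p "$HOME/.config/restic"
printf '%s\n' 'sftp:demouser@backupserver:/home/demouser/restic-repository' \
  > "$HOME/.config/restic/restic-url.txt"

# Show what was written.
cat "$HOME/.config/restic/restic-url.txt"
```

With working SSH access, the directory on the destination can be created with something like ssh demouser@backupserver 'mkdir -p /home/demouser/restic-repository'.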

The Repository Password

Repository encryption is derived from the repository password. I have now mostly standardized on random passwords that I keep in a password manager. I save the random password to ~/.config/restic/restic-pw.txt

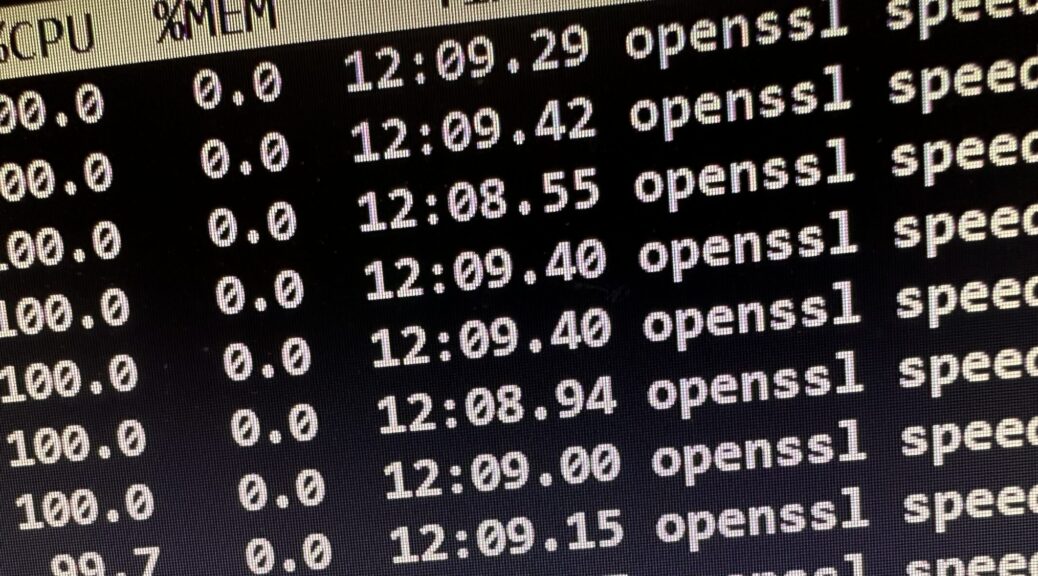

openssl rand -hex 32 > ~/.config/restic/restic-pw.txt

(This file will contain a line break at the end, which is ignored by restic.)
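Since this file holds the key to the whole repository, it seems sensible to keep it readable by your own user only and to avoid overwriting it by accident. A sketch of that, where the pwfile variable is just a name picked for this example:

```shell
pwfile="$HOME/.config/restic/restic-pw.txt"
mkdir -p "$(dirname "$pwfile")"

# Only generate a password if none exists yet; overwriting the password
# of an already initialized repository would lock you out of it.
if [ ! -e "$pwfile" ]; then
    # The subshell keeps the restrictive umask from leaking into the session.
    (umask 077 && openssl rand -hex 32 > "$pwfile")
fi

# Tighten permissions in case the file was created earlier with defaults.
chmod 600 "$pwfile"

# 64 hex characters plus the trailing line break, so 65 bytes.
wc -c < "$pwfile"
```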

Repository Initialization and First Backup

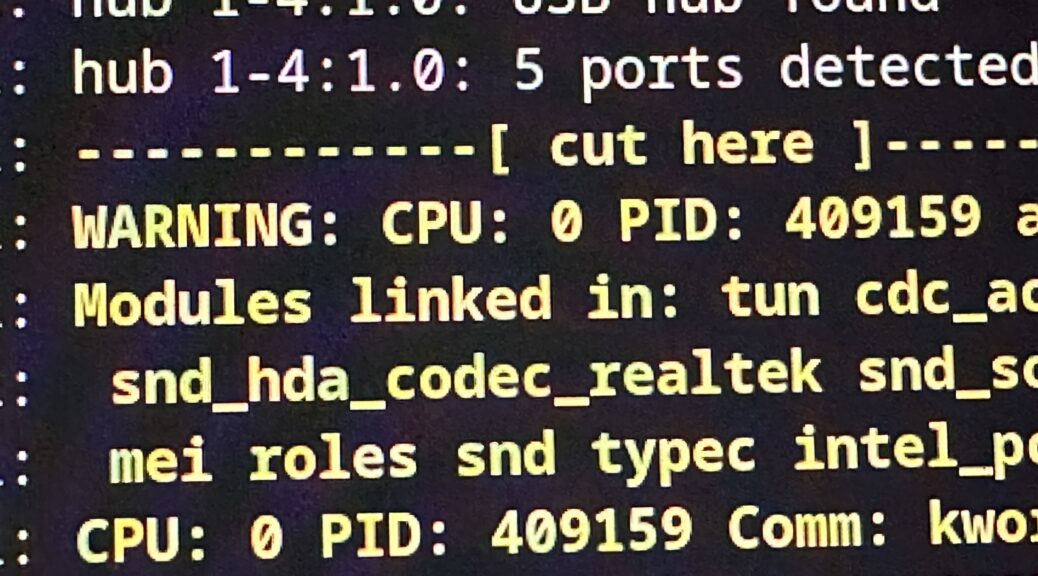

DO NOT PANIC before you’ve seen the next section.

restic init \
  --repository-file ~/.config/restic/restic-url.txt \
  --password-file ~/.config/restic/restic-pw.txt

restic backup "$HOME" \
  --repository-file ~/.config/restic/restic-url.txt \
  --password-file ~/.config/restic/restic-pw.txt

Connecting the Dots

Restic’s extremely basic user interface is often a bit of an obstacle in day-to-day use:

- restic backup always needs the paths to include passed on the command line.

- The same goes for restic forget, which needs the retention policy on the command line.

I configure restic’s repository through environment variables:

# ~/.bashrc

export RESTIC_REPOSITORY_FILE=~/.config/restic/restic-url.txt

export RESTIC_PASSWORD_FILE=~/.config/restic/restic-pw.txt
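If either file goes missing, restic only complains once a backup is attempted. A small optional check, sketched here as an assumption rather than anything restic requires, can sit right next to the exports and warn when a new shell starts. It uses $HOME instead of ~ so it behaves the same in shells other than bash:

```shell
# ~/.bashrc (sketch): the same two variables, followed by a sanity check
export RESTIC_REPOSITORY_FILE="$HOME/.config/restic/restic-url.txt"
export RESTIC_PASSWORD_FILE="$HOME/.config/restic/restic-pw.txt"

# Warn on stderr if one of the files cannot be read.
for f in "$RESTIC_REPOSITORY_FILE" "$RESTIC_PASSWORD_FILE"; do
    [ -r "$f" ] || echo "restic config file missing or unreadable: $f" >&2
done
```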

For invocations that require options, I create shell aliases:

# ~/.bashrc

alias restic-backup="restic backup --exclude ./Downloads $HOME"

alias restic-forget="restic forget --keep-last=10"

alias restic-check="restic check --read-data-subset 1%"

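With the environment variables and aliases in place, restic-backup will back up without any further options, restic-forget will remove old snapshots (the data they reference is only freed once restic prune runs, or when --prune is added to the forget call) and restic-check will check the integrity of a random subset of repository contents.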

Regular Restore

For casual backup browsing, restic-browser does exist, but restic mount ~/mnt and a quick dive into ~/mnt/snapshots/latest is my go-to approach on Linux.

PANIC.txt

As with every backup, you MUST know how to access it when you need it, and in this case that includes knowing the restic repository password.

I keep instructions similar to this in my password manager’s text notes, along with the restic repository password itself:

restic mount ~/mnt \
  --repo sftp:demouser@backupserver:/home/demouser/restic-repository

Final words

Bootstrapping restic, as it for some reason lacks any sort of configuration file, is a lot harder than it should be. In my work supporting enterprise Linux laptop users, I consistently had a very hard time providing reliable guidance for maintaining their backups. I consider my little drestic wrapper a failure as well.

The above is the bare minimum I can break things down to without straying too far from stock restic (no wrapper script or shell functions), with the minor tradeoff of having to create the shell aliases.

Have fun and remember to use a password manager. 😊

For restic on Windows, have a look at my previous post.